Article by: Philipp Schlatter, Fermin Mallor, Daniele Massaro, Adam Peplinski, Timofey Mukha, Ronith Stanly, KTH Engineering Mechanics

The pilot phase of the new high-performance computing system LUMI, part of EuroHPC, has been used extensively by our group for initial studies of complex turbulent flow phenomena, mainly related to turbulence in the vicinity of walls. Turbulence, albeit an engineering topic with a long history dating back to Leonardo da Vinci, is as important as ever given the societal need for efficient transport with a reduced CO₂ footprint. At the same time, potentially radically new airplane designs, driven by the shift towards electric or hydrogen propulsion, pose new challenges that may require better predictive capabilities from current simulation software.

From a computational point of view, new computer systems such as LUMI-C, and perhaps even more so LUMI-G, also require rethinking previously established simulation algorithms. Not only is the exposed parallelism increasing, but programming languages and paradigms are also evolving rapidly. The focus on high-performance data analytics (HPDA) emphasises the need for efficient I/O methods, potentially employing data reduction such as compression or low-order modelling as part of the data generation. All these aspects need to be considered when deploying adapted software packages on systems like LUMI.

In the following, we briefly describe some of our group's efforts during the (short) pilot testing on LUMI-C, together with some preliminary results. We studied five different but related cases, covering as many aspects of the setups as possible, in order to gain as much insight as possible into the scaling behaviour of the LUMI machine and to allow comparisons with similar machines in Europe.

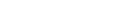

Our first case is the classical turbulent flow around an airfoil at increasingly high Reynolds numbers (or speeds). We chose the infinite-span NACA 4412 wing profile. The main feature is the use of adaptive mesh refinement (AMR) and non-conformal meshing in the spectral-element code Nek5000; this is the first application of this code version to high-Reynolds-number turbulent flows. Our present work focuses on the appearance of backflow events under strong adverse pressure gradients (APGs), which are relevant for understanding the initial stages of flow separation, which ultimately leads to stall. Figure 1 shows regions of backflow events for two different angles of attack, with an impression of the spectral-element mesh in the background.
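Backflow events are commonly identified as instants at which the streamwise wall-shear stress becomes negative. The following is a minimal sketch of how such events could be quantified at a single wall point, assuming the wall-shear stress has already been sampled as a time series; the function names and the sample data are hypothetical and not taken from our simulations.

```python
from typing import Sequence


def backflow_fraction(tau_wx: Sequence[float]) -> float:
    """Fraction of samples with negative streamwise wall-shear stress."""
    if not tau_wx:
        raise ValueError("empty time series")
    return sum(1 for t in tau_wx if t < 0.0) / len(tau_wx)


def count_events(tau_wx: Sequence[float]) -> int:
    """Number of distinct backflow events, i.e. contiguous runs of tau_wx < 0."""
    events = 0
    in_event = False
    for t in tau_wx:
        if t < 0.0 and not in_event:
            events += 1  # a new run of negative wall-shear stress starts here
        in_event = t < 0.0
    return events


if __name__ == "__main__":
    # Hypothetical samples of the streamwise wall-shear stress at one wall point.
    samples = [0.8, 0.3, -0.1, -0.2, 0.4, 0.5, -0.05, 0.2]
    print(backflow_fraction(samples))  # 0.375
    print(count_events(samples))       # 2
```

For an infinite-span wing, the same indicator would typically also be averaged over the homogeneous spanwise direction to improve the statistics.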

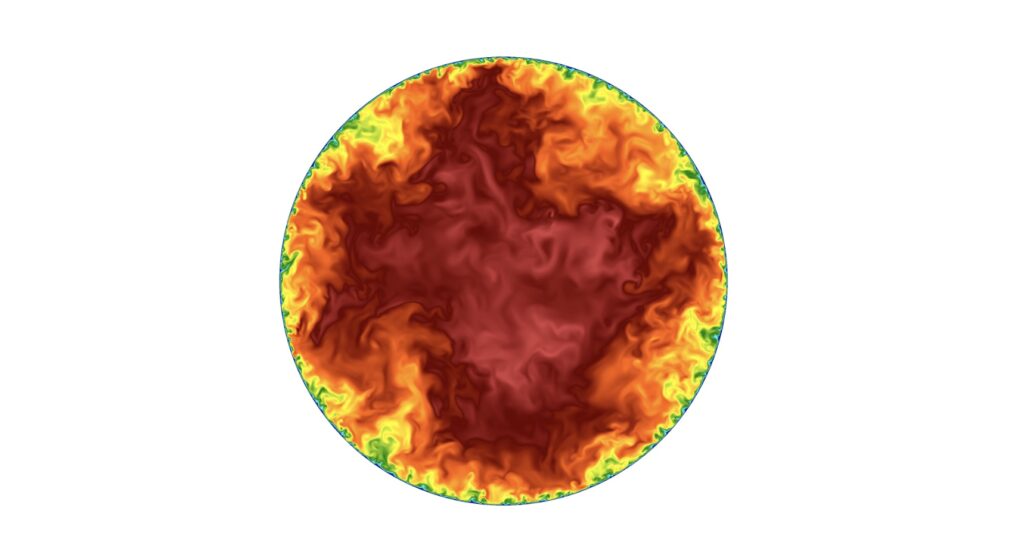

To understand the turbulent flow in canonical geometries such as pipes (think of pipelines, but also of many other flows in process engineering), we have also performed direct numerical simulations (DNS) of the three-dimensional flow in a straight pipe at a high Reynolds number. Together with the spatially developing boundary layer and the plane channel, pipe flow is one of the three canonical cases of wall-bounded turbulence. Such flows are ubiquitous in nature and relevant for many engineering applications. A deeper understanding of the near-wall mechanisms is necessary, since roughly half of the energy spent in transporting fluids through pipes, or vehicles through air and water, is dissipated by turbulence in the vicinity of walls. We have analysed the main statistical quantities, evaluated their accuracy by means of uncertainty quantification, and validated the results against a different numerical solver.
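Time averages from a DNS are computed from correlated samples, so the naive standard error of the mean underestimates the true uncertainty. One common way to account for this is the non-overlapping batch-means estimator, shown here as a minimal sketch; it is not necessarily the uncertainty-quantification method used in our study, and the synthetic AR(1) signal merely stands in for a real velocity time series.

```python
import math
import random
from statistics import mean, stdev
from typing import Sequence, Tuple


def batch_means(series: Sequence[float], n_batches: int) -> Tuple[float, float]:
    """Return (mean, standard error) of a correlated time series.

    The series is split into n_batches non-overlapping batches of equal length;
    leftover samples at the end are discarded. The batches are assumed to be
    much longer than the correlation time, so their averages are roughly independent.
    """
    if n_batches < 2:
        raise ValueError("need at least two batches")
    batch_len = len(series) // n_batches
    if batch_len == 0:
        raise ValueError("series too short for the requested number of batches")
    batch_avgs = [
        mean(series[i * batch_len:(i + 1) * batch_len]) for i in range(n_batches)
    ]
    return mean(batch_avgs), stdev(batch_avgs) / math.sqrt(n_batches)


if __name__ == "__main__":
    # Synthetic, strongly correlated AR(1) signal (hypothetical stand-in for DNS data).
    rng = random.Random(0)
    x, signal = 0.0, []
    for _ in range(20000):
        x = 0.95 * x + rng.gauss(0.0, 1.0)
        signal.append(1.0 + x)
    m, se = batch_means(signal, n_batches=20)
    naive = stdev(signal) / math.sqrt(len(signal))
    print(f"mean = {m:.3f} +/- {se:.3f} (naive estimate: {naive:.3f})")
```

For a correlated signal like this one, the batch-means error bar comes out noticeably larger than the naive estimate, which is the point of the correction.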

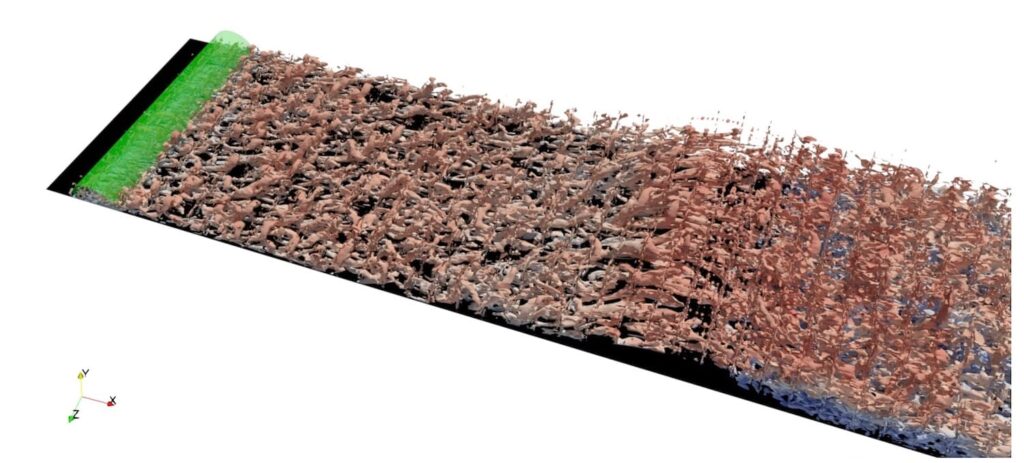

Large-scale simulations of the so-called “Boeing Speed Bump” were carried out to study the complex turbulent flow over this geometry, which resembles surfaces found on airplane wings. Understanding such flows is essential for designing more efficient means of transportation. Two methods of inflow-turbulence generation were used: tripping of the boundary layer, and prescribing the flow from a precursor simulation at the inflow. These simulations helped us to better understand the complexity of the problem and to plan more targeted simulations for future studies.

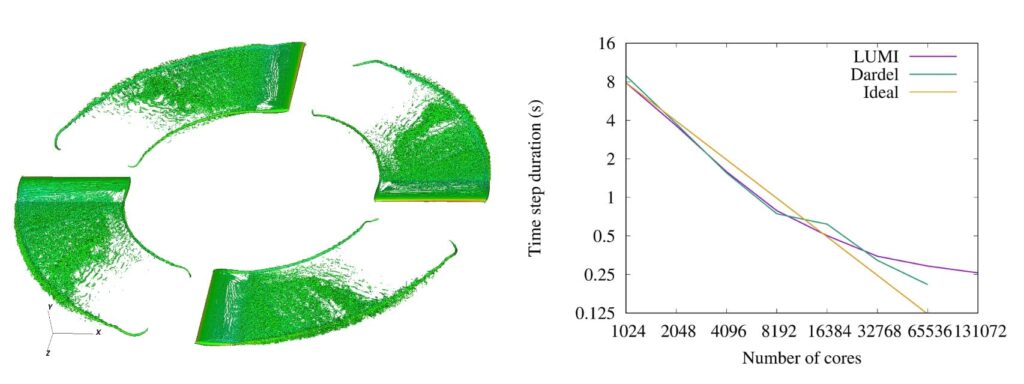

To assess the overall performance of our simulation codes, we performed strong-scaling tests of the CFD solver Nek5000 using a simplified rotor case at a Reynolds number of Re = 10⁵. The rotor consists of four blades with NACA 0012 airfoil sections and rounded wing tips, and the adaptive mesh refinement (AMR) version of Nek5000 was used. In AMR simulations, the mesh is adjusted dynamically during the run to minimise the computational error and to better represent flow features. In AMR Nek5000, the resolution is increased by splitting elements into smaller ones in regions of high computational error. The strong-scaling tests were performed on two computational clusters with similar architecture, Dardel (PDC, Sweden) and LUMI (CSC, Finland), using a mesh of 631,712 elements with polynomial order 7. The results are presented in Fig. 4. On both systems, the largest simulation covered most of the machine (512 of the 554 available nodes on Dardel and 1024 of the 1536 nodes on LUMI). Parallel performance on the two clusters is similar: initial super-linear scaling is followed by performance degradation. We conclude that our AMR version of Nek5000 scales very well on the CPU partitions of both Dardel and LUMI down to about 20 elements per MPI rank, an excellent figure that indicates favourable memory management on the AMD EPYC architecture.
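The quantities used to read a strong-scaling plot such as Fig. 4 (speed-up, parallel efficiency and load per MPI rank) can be computed as in the sketch below. It assumes one MPI rank per core and 128 cores per node; both are assumptions about the run configuration, and the timings are placeholders, not the measured data. For polynomial order N = 7, each hexahedral spectral element carries (N+1)³ = 512 element-local grid points, so 20 elements per rank corresponds to roughly 10,000 grid points per rank.

```python
from typing import Dict, List


def strong_scaling(nodes: List[int], times: List[float], n_elements: int,
                   poly_order: int, cores_per_node: int = 128) -> List[Dict[str, float]]:
    """Speed-up, parallel efficiency and load per MPI rank for a strong-scaling test.

    Speed-up and efficiency are relative to the smallest node count.
    Assumption: one MPI rank per core, hexahedral elements with (N+1)^3 points.
    """
    points_per_element = (poly_order + 1) ** 3
    base_nodes, base_time = nodes[0], times[0]
    rows = []
    for n, t in zip(nodes, times):
        ranks = n * cores_per_node
        speedup = base_time / t
        ideal = n / base_nodes
        rows.append({
            "nodes": n,
            "ranks": ranks,
            "elements_per_rank": n_elements / ranks,
            "points_per_rank": n_elements * points_per_element / ranks,
            "speedup": speedup,
            "efficiency": speedup / ideal,  # > 1 means super-linear scaling
        })
    return rows


if __name__ == "__main__":
    # Rotor mesh: 631712 elements, polynomial order 7 (from the text above).
    # Wall-clock times per time step are hypothetical placeholders.
    nodes = [32, 64, 128, 256, 512, 1024]
    times = [8.0, 3.8, 1.9, 1.0, 0.65, 0.5]
    for row in strong_scaling(nodes, times, n_elements=631712, poly_order=7):
        print(f"{row['nodes']:5d} nodes  {row['elements_per_rank']:7.1f} el/rank  "
              f"eff = {row['efficiency']:.2f}")
```

Under these assumptions, about 20 elements per rank is reached at roughly 256 nodes, while at 1024 nodes each rank holds fewer than 5 elements, which makes the eventual loss of efficiency plausible.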

Finally, we have also studied the widely used OpenFOAM software package, which is employed in a variety of academic and industrial applications. We conducted scaling tests of the linear solvers in OpenFOAM v2106 using up to 16k cores. Excellent scaling and a large performance boost were observed when using bindings to the external solvers provided by the PETSc library. These results have been submitted for presentation at the 17th OpenFOAM Workshop. We have also conducted wall-modelled large-eddy simulations with OpenFOAM of boundary-layer separation from a smooth ramp, on a range of grids with up to 470 million computational cells. The results were submitted to an AIAA workshop focused on computing this flow, and also for presentation at the ECCOMAS Congress 2022.
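In wall-modelled large-eddy simulations, the near-wall layer is not resolved; instead, the wall-shear stress is estimated from the LES velocity sampled at some distance from the wall. As an illustration only, the sketch below shows a generic algebraic wall model, not necessarily the one used in our ramp simulations: it inverts Spalding's law of the wall for the friction velocity u_τ by bisection, assuming the constants κ = 0.41 and B = 5.2 and hypothetical flow values.

```python
import math


def spalding_yplus(u_plus: float, kappa: float = 0.41, b: float = 5.2) -> float:
    """Spalding's law of the wall: y+ as a function of u+."""
    ku = kappa * u_plus
    return u_plus + math.exp(-kappa * b) * (
        math.exp(ku) - 1.0 - ku - ku ** 2 / 2.0 - ku ** 3 / 6.0
    )


def friction_velocity(u: float, h: float, nu: float, tol: float = 1e-12) -> float:
    """Solve y+ = spalding(u+) for u_tau, given the velocity u sampled at height h.

    The residual f(u_tau) = h*u_tau/nu - spalding(u/u_tau) increases
    monotonically with u_tau, so bisection on a bracketing interval converges.
    """
    if u <= 0.0 or h <= 0.0 or nu <= 0.0:
        raise ValueError("u, h and nu must be positive")

    def residual(u_tau: float) -> float:
        return h * u_tau / nu - spalding_yplus(u / u_tau)

    lo, hi = 1e-10 * u, u
    while residual(hi) <= 0.0:  # widen the bracket until the residual is positive
        hi *= 2.0
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical sample: U = 10 m/s at h = 1 cm in an air-like fluid.
    u_tau = friction_velocity(u=10.0, h=0.01, nu=1.5e-5)
    rho = 1.2  # hypothetical density in kg/m^3
    print(f"u_tau = {u_tau:.4f} m/s, tau_w = {rho * u_tau ** 2:.4f} Pa")
```

The resulting wall-shear stress τ_w = ρ u_τ² would then be imposed as the wall boundary condition of the LES. Bisection is chosen here for robustness; a Newton iteration would converge faster but needs a safeguarded initial guess.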